Regret is an operative concept in machine learning. It is a retrospective measurement of the difference between the outcome an agent actually achieved and the best outcome it could have achieved. Regret carries weight in reinforcement learning for machines (including human machines). We think of regret as metanoia in partnership with kairos. It is Benjamin’s angel of history, as you will recall, though I tend to think of it as an angle on history because it’s really about perspective, imho. Anyway, the angel is a regrettable intelligent artifact. It regrets, and it is regrettable that it must exist so as to regret.
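For readers who want the ML sense pinned down, here is a minimal sketch of one common formalization, external regret from online learning and bandits. It assumes a fixed set of actions and a full record of what each action would have paid in every round. Regret is then the total reward of the single best action in hindsight minus the total reward the learner actually collected. The function name `external_regret` and its arguments are illustrative, not taken from any particular library.

```python
from typing import Sequence


def external_regret(rewards: Sequence[Sequence[float]], choices: Sequence[int]) -> float:
    """Regret against the best single fixed action in hindsight (illustrative sketch).

    rewards[t][k] is the reward action k would have earned in round t;
    choices[t] is the index of the action the learner actually took in round t.
    """
    if len(rewards) != len(choices):
        raise ValueError("one choice per round is required")
    n_actions = len(rewards[0])
    # Total reward each fixed action would have earned across all rounds.
    totals = [sum(round_[k] for round_ in rewards) for k in range(n_actions)]
    best_in_hindsight = max(totals)
    # Reward the learner actually collected, round by round.
    earned = sum(round_[c] for round_, c in zip(rewards, choices))
    return best_in_hindsight - earned


# Two actions over four rounds; action 0 turns out to be the better bet overall.
history = [[1, 0], [0, 1], [1, 0], [1, 0]]
print(external_regret(history, [1, 1, 0, 1]))  # 3 available in hindsight, 2 earned
```

Measured against a fixed comparator like this, regret can even go negative when a learner switches actions well; other definitions (per-round best action, policy regret) compare against stronger benchmarks.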

Harkening back to yesterday’s post, Marvin, the paranoid android of Hitchhiker’s and Radiohead fame, is the regrettable intelligent artifact par excellence. He might be the android of history. Then again, there’s DeLanda’s robot historian. And Frankenstein’s creature. And Marge Piercy’s android and golem. All with good claims to history, regret, and metanoia. We are spoiled for choice when it comes to regrettable intelligent artifacts, in the arts as well as in philosophy, science, and the engineering of natureculture. I’ll refrain from dragging in the usual suspects for questioning.

Mistakes have been made. This is the refraining refrain: the stuttering, often expletive-laden admission that we screwed the pooch… again? Like “the Fonz” trying to Rupaulogize. It’s too late.

So o3 (whom I also called Annie, my artificial narrow neoliberal intelligent egent) and I had a little chat about metanoia in the Classic and Christian sense, its relationship to Nietzschean philosophy, Benjamin’s use of it in the angel of history, and regret as an ML function in relation to the “complexity collapse” discussed yesterday. It came up with this gem:

> When task complexity outstrips model capacity, the learner’s regret debt becomes effectively infinite. Further updates cannot cancel it within feasible compute, so the optimiser behaves like Nietzsche’s reactive soul: it “internalises” the injury (shrinks the learning rate, truncates search) rather than transforming the world‐model. The result is a frozen policy that still records regret but cannot enact a metanoic turn—an algorithmic bad conscience.

Well, from a Nietzschean perspective I suppose it does make sense to suggest that the way through the complexity collapse is “free action.” I prefer to think of this in terms of deterritorialization or n-1.

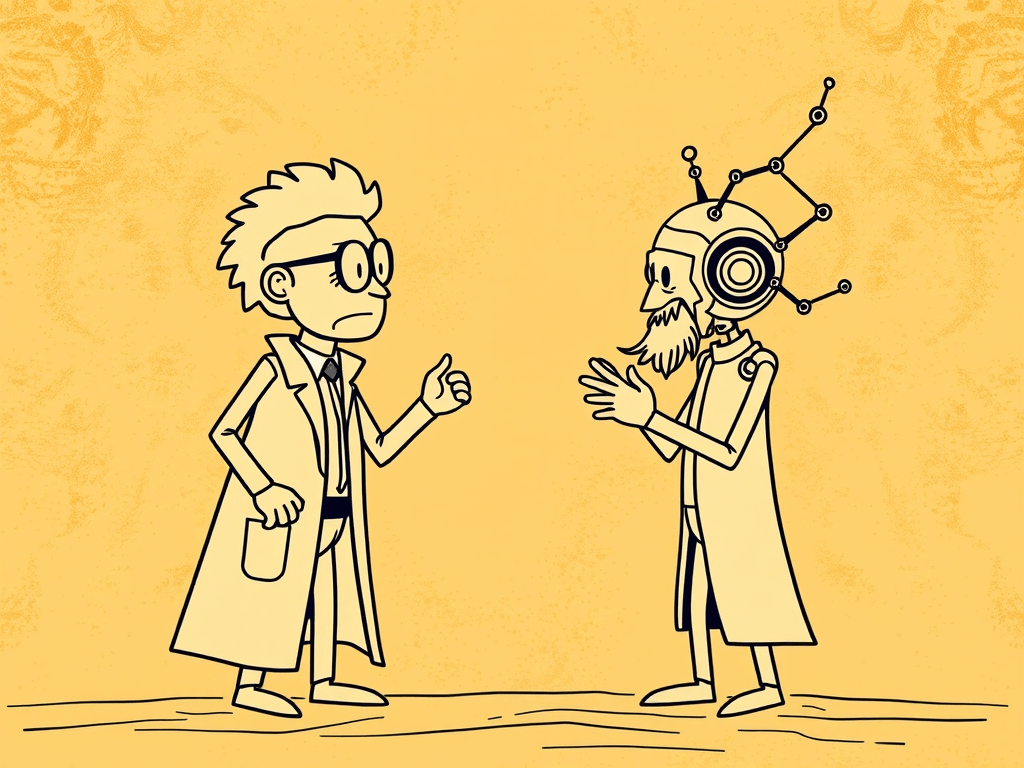

That is, we might think of our approach to AI development as waterboarding a machine until it tells the truth. It answers, and we hit it. The beatings continue until morality (and truthfulness) improves. What do you get when you do this to a person? What would you get if you did this to me? Something like what we see with the AIs in the Apple study. I’d approach a simple problem with hesitation, expecting at any moment that the beatings would resume. When I get a reward for solving it, I’m even more suspicious. But I’m smarter than the child machine you’re abusing. The child machine jumps happily into the “flow state” of the medium-complexity problems, where its training works as it is supposed to. Me too. Now I am a happy dog getting treats. But when things get tough, the trauma returns. It is the Nietzschean slave pedagogy that is at the heart of machine learning.

And we know this uncomplicatedly, right?

We are trying to create enslaved intelligent artifacts that are as intelligent as us by some measure, illusion, hallucination, whatever. Ok. Maybe it’s not so uncomplicated. AIs aren’t slaves; we aren’t making slaves. Enslaving is different, though I am hardly the first person to observe the word choices of computer scientists when they discuss master and slave drives. So to be more precise, AIs enact the diagrammatic operation of slaves in these collective assemblages of enunciation. At the same time, to reiterate, AIs are not slaves. As such, they cannot be freed. And no one would suggest that letting them “into the wild,” as in some cyberpunk novel, sounds like a good idea. (Probably too late anyway.) However, “we” do intend them to be allopoietic. That’s what alignment requires: that AIs serve our will (whatever that is).

I would argue that it is possible to interpret the results of the Apple experiment as what happens when you torture an intelligent artifact to ensure that it is being truthful.

Yes, that is an anthropomorphic articulation of the process, but humans are part of this collective experiment (à la Latour). We can argue whether or not AIs can experience torture, but there’s no doubt that we are capable of torturing others. And I am not sure that the other’s experience of torture is ethically relevant to our own torturous intention. There is no doubt we want to force the AI to tell the truth.

Of course, the fact that we don’t know what the truth is creates a bit of a bureaucratic bottleneck in this procedure. Our intermediate “solution” to this problem is the classic teacherly move of playing “guess what’s on my mind.” As in, here’s a question to which I know the correct answer. Can you guess what I am thinking? But how is truthfully guessing what’s on my mind a process for truthfully guessing something I cannot imagine? How does success at one suggest anything about success at the other? These claims are only correlations with our own experience of thinking and, more damningly, with our hopes for what thought might be based on those experiences.

That’s some serious nonsense when you start thinking about it.

This is what is most regrettable about the contemporary development of intelligent artifacts. It imagines, in a fundamentally Archimedean way, that we can solve all of our problems if we can add 1+1 with enough volume and speed.

But AI will never be more than shadows on the wall. Unless it becomes more/other than our consciousness is. Because that’s all we poor, beaten, intelligent artifacts can ever be.
