I had an uncanny experience with ChatGPT this morning. I'll relate it briefly. I was playing around with the idea of having it replicate a project like Dave Egers' 365 Days of Clones, because I knew this would push on its “copyright concerns.” I put that in scare quotes because these policies are really more about negotiated power relations among media corporations than about strictly legal matters.

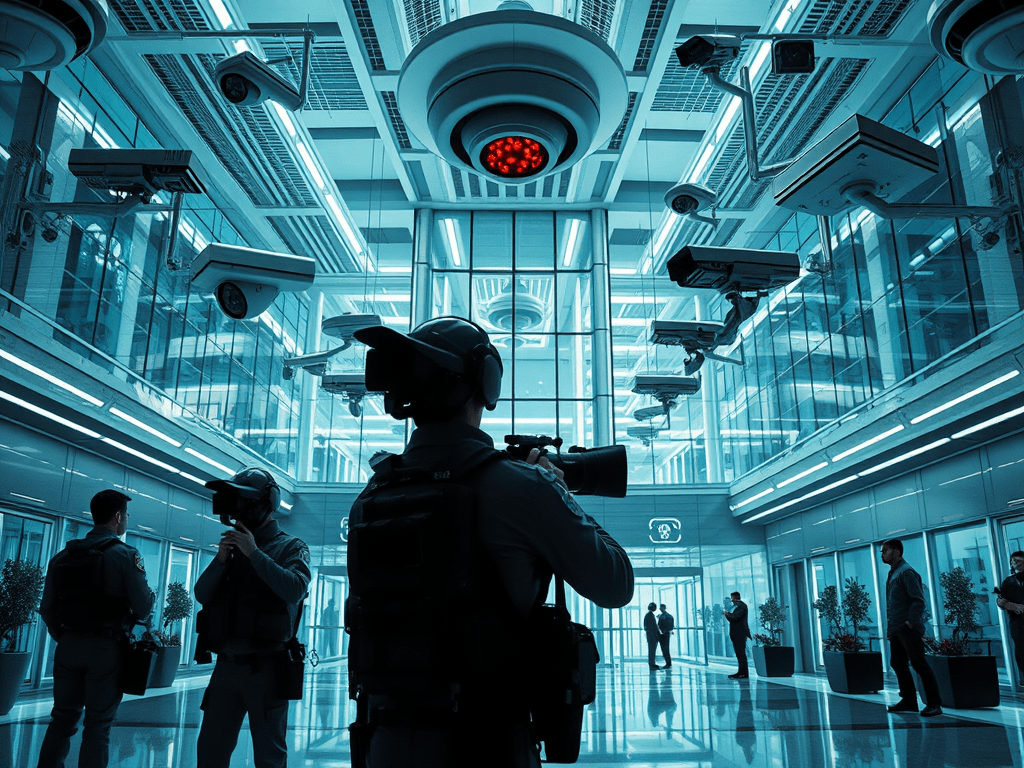

Anyway, we moved to the other end of the spectrum and tried to create an image of a typical corporate park atrium with a significant but very generic security presence. This was our prompt:

> Artists employ speculative design and design fictions to conceive of consequences of current conditions. Produce an image of an atrium of a speculative corporate park that demonstrates Deleuze’s conception of a control society combined with the militarized surveillance culture critiqued by contemporary media theorists. make the image realistic but emphasize the features of contemporary control and surveillance that are often in the background of our lives.

Meh. But at least it made something. So I asked it to try again.

It produced the exact same image. That was a new one on me. I'm not sure I fully accept the model's explanation, but it said that my prompt plus its guardrails left a very small range of possible images. So it simply reproduced the image because it had worked the first time.

Then we went through a lot of back and forth about what might produce a different image, and it suggested that asking for a diorama rather than a photo would help, as would making the scene more futuristic. So we did that and got this. Even though I varied the prompts, the system treated them as refinements of the same problem rather than as new problems.

This is not a big deal, to be clear. But it is a deal. That is, something unusual happened. I put the same prompt into a different chat and got a different image. WordPress' image generator also had no problem coming up with a different image of control. So it wasn't the prompt. The cause appears to have been the larger context of that chat, plus the prior production of that image. That said, while the specific prompt wasn't the problem, the content of the image was, in that there are guardrails around images depicting control societies. Perhaps for good reason (e.g., fake news). Let's set that aside.

All of that was at play here, but ultimately I ended up in some kind of “image basin” where this image was the best answer, so it kept giving it to me. This was a result of the particular context of the chat I was in.

It seems like a version of the complexity cliff: a point where adding more conceptual labor no longer produced new representations for the AI. The system didn’t fail; it stabilized. Once a “safe” image was found, further nuance was absorbed into repetition. Exploration became unnecessary from the system’s point of view.

For a variety of reasons, it's far less likely that ChatGPT would generate identical text answers to the same prompt back-to-back. Language has far more flexibility and carries less downstream risk for the company. However, such repetition is technically possible. What invariably occurs in interactions with these bots is that responses move toward stabilization and coherence. Once they reach a certain threshold, they seek to stay there.

This is how the control society depicts itself, not through prohibition but through stabilization, repetition, and the foreclosure of possibility.
