Today is a good day to be in the business of making stickers that say “Now with AI,” because those suckers are being slapped on everything! Especially higher education. “Everyone” wants (or will want) a degree with a “Now with AI” sticker on it, or so we believe. AI is the future… of everything. Of course, in the future everyone will need to know how to use AI as part of their everyday lives, to say nothing of the specific applications of their professions. As we know, there will always be a need for humans to participate in the production of knowledge, culture and life–bare existence.

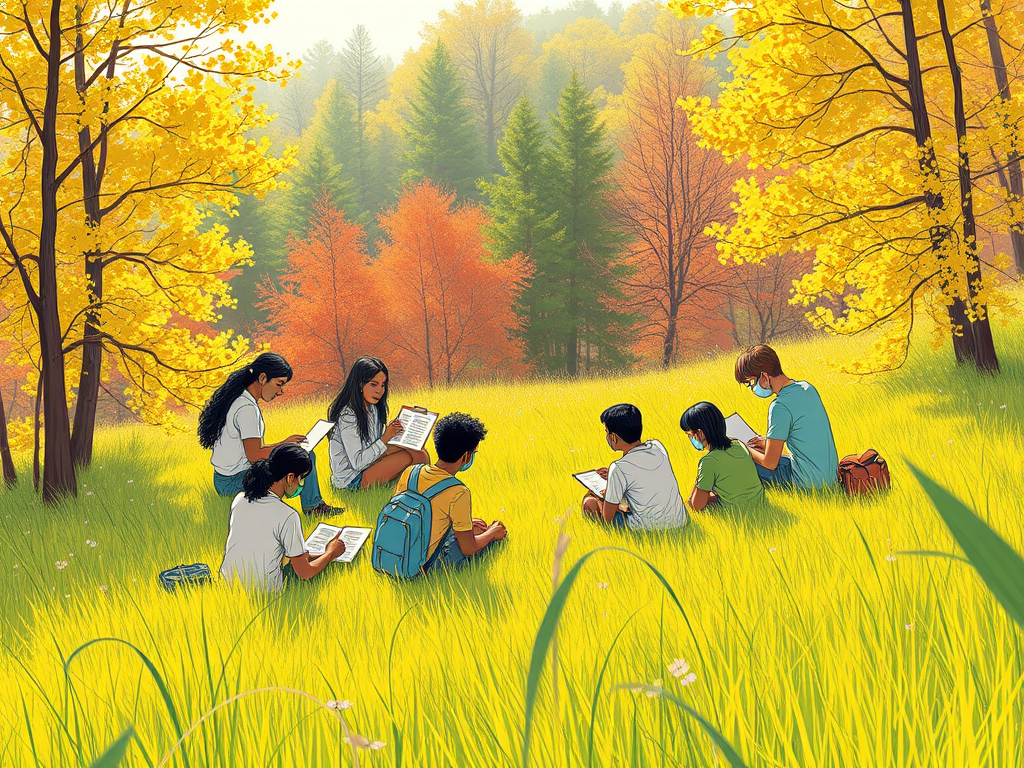

So we are training loopers: humans-in-the-loop. We entrain humans to function as loopers who in turn entrain their AIs to align. The AGIs are our gods. They are the beings we have built to know things about the world that we cannot know ourselves, that we are incapable of knowing. The loopers keep those gods on a chain, a network of entraining and entrained actors–pre-consciously, unconsciously, non-consciously: a chain of which the loopers themselves are a part. The aim is for the loopers to act in a predictable fashion, a predictability that may rely upon complex instruction and habituation. Hence we get contemporary higher education.

How does this already work? Here’s a creepy example for you, just to keep you more entertained. Universities value student retention. Some departures are judged to be good or unavoidable decisions. But what about the students that the university identifies as ones who shouldn’t leave but do, the ones the university wants to retain but doesn’t? We can set aside for my purposes here an investigation of the emergence of this process by which a particular population of humans ends up in this category of those whom a university wants to retain but doesn’t. Let’s just say there are people in this bin.

So how do we get them to behave differently so they don’t leave? Does it sound creepy yet? How do we get them to feel differently? To have different experiences that result in them staying? Well, since it’s 2025, we can start by scraping the hell out of all the data we can about these people and then sic some AI on their asses. We can get a fuller picture of the population that we are describing/creating: who is in it? who is moving toward it? who is moving further toward the black hole of departure? who is moving away? what are the triggers, the pressure points? Once we have the primary parameters that are predictive of departure we can seek to modify them, much like sliders on a recording studio equalizer. In fact, we can imagine our activity as an effort to create some harmony or resonance in the student population through the parameterization of their affects.

OK, so that sounds creepy maybe or unethical or cynical. But this is just life as a mammal plus the technological capacity to modulate ourselves. We’re just more sophisticated/complex/subtle than back when we were chopping off body parts as our main mechanism for social regulation.

But this emergent pedagogy of the looper, or looper training, that we have embarked upon is an excellent opportunity for me to revisit difference and repetition as learning, in Deleuze of course but elsewhere too. Entrainment and habituation as a professional looper will seek to operate cybernetically to arrive at predetermined (“learning”) outcomes. As faculty, admin, students, parents, “stakeholders,” yadda, yadda, we are all well-rehearsed for our role in this story. Students and parents want assurances about what the student will know before they know it. It’s a neat trick. It’s total bullshit, but it’s neat. You know that though. We spend our lives doing the Jedi mind trick on ourselves. That’s old news. The new part is that they are so much closer to actually having their fantasies realized.

The whole fantastical endgame of looper degrees and pedagogy is that they will entrain humans to close loops. As this is fundamentally habituation, it’s about taking a potentially taxing mental task and closing the loop on it with the satisfaction of a far simpler completion, like slotting the last jigsaw piece into place over and over again without it feeling repetitive.

Put another way, AI dramatically shifts the dividing line between habituated and considered human action. Technologies have always done this: they have always reflected the concretization of some considered human action into one that can be habituated. AI as a universal Turing machine can hypothetically do this for all considered human action, that is, any action that requires significant conscious thought.

One consequence of this is that pedagogy changes. Capacities that once required active consideration from humans in order to be learned and practiced can now be habituated in humans as AI loopers. This creates a new niche for higher education. There will be, there is already, a demand for humans who have been trained to complete complex tasks. That’s what parents and students want: to be assured that a degree will train them to behave in a valuable way. Thinking, active consideration, is understood as something the student will be required to do as part of the training, but not as a career. But AI loopers will require far less active consideration in their training. For humans in the loop, active consideration is only the production of error bars in a process that is aimed at closing loops in predictable ways.

Sadly, humans can’t help but learn things, as much as we try not to. I mean, one of the first things we learn is that we are going to die. That sucks. Learning is unexpected. If you expected it, then you knew it already. You don’t know what the consequences of learning something might be. We all feel regret about things we have learned, especially when we learn from horrible mistakes and failures. Few people desire that kind of learning or can take much of it. The whole purpose of pedagogy from the start was not to encourage learning but to manage it and make its effects predictable. Graduates of the Pink Floyd Normal School notwithstanding, the function of teachers is to reduce the suffering and pain of learning, to ensure as much as possible what the results will be.

Here’s what I think, as painful as that might be. No doubt plenty of people will want to be or will be AI loopers. Perhaps they will be happy with the predicted outcomes. At least they will be able to live their lives through habituation rather than considered action. That’s a relief for them, I’m sure.

Without romanticizing it, thought is the other thing. Our capacity for thought is reflected by the degrees and intensities of uncertainty we can maintain. Agency, whatever it may be, can only exist in the context of uncertainty. You won’t find it inside a closed loop. Make no mistake. AI operates here too. This isn’t about declension. People never wanted to think. Thinking runs the risk of our realizing some unpleasant things. Why do that if you don’t have to? So it’s nothing new.
